In 2014 anyone serious about search and online presence needs to create an online persona and establish a brand online.

The way your brand presence is established is an offshoot of the SEO efforts put in by your SEO company.

We keep analyzing analytics reports and regularly focus on visits (now called Sessions), unique visitors (now called Users), rankings, bounce rate, landing pages, search queries, etc. to judge SEO performance. But SEO has another very important deliverable: to establish your brand online with every footprint your SEO company helps you create on the web.

The task of establishing the brand presence is not an independent task but is woven into the on-page, off-page and technical factors of the SEO deliverables.

Every little task the SEO performs to make the campaign successful either promotes your brand or harms its presence.

10 Things To Check If Your SEO Is Establishing Your Brand Online

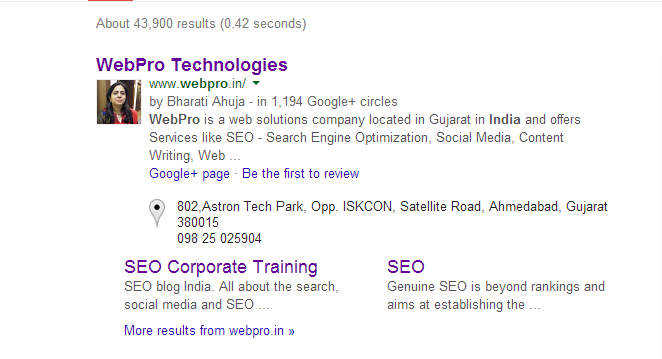

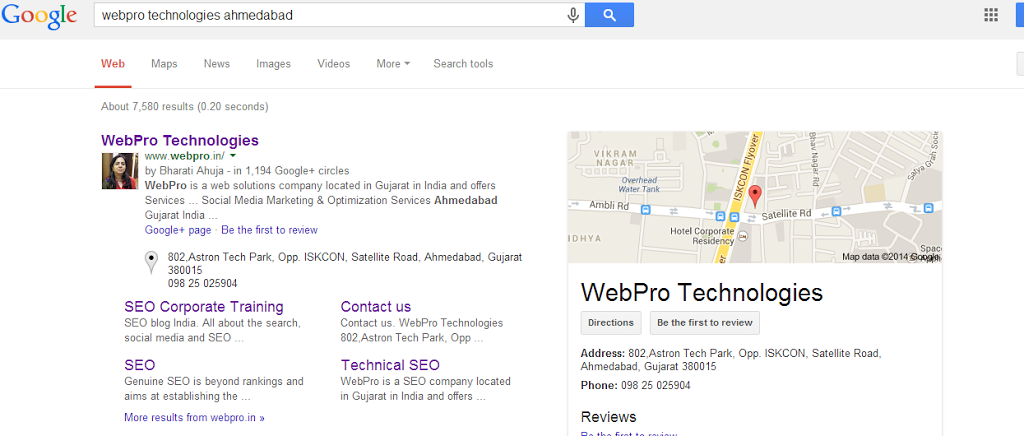

1. Search For Your Brand Name

Do a brand name search and look at the results. If the SEO campaign is heading in the right direction, the listings on the first page should be pages where your brand name is mentioned or that are closely associated with it. Often the results include the company's social media pages, and of course the website and blog should be listed in the SERPs.

2. Use Google Alerts to monitor your brand.

Set up a Google Alert for your brand name so that you are notified whenever people mention the brand on the web.

3. Check the analytics trend for branded search queries and keywords

Use the filter option in Google Analytics to find the search queries and keywords that include the brand name.

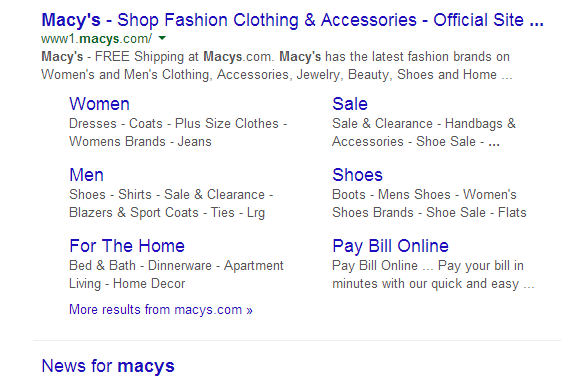

4. Check Sitelinks In SERPs

Do a search for your brand name, or for your brand name + your country.

If Google has allocated sitelinks, the search results may look like the example above. There can also be a larger number of sitelinks, as seen below:

5. Schema For Logo And Address

Check if the logo schema has been added to the page. This helps search engines associate the company's logo image with the brand.

The code for logo should be as follows:

    <div itemscope itemtype="http://schema.org/Organization">
      <a itemprop="url" href="http://www.example.com/">Home</a>
      <img itemprop="logo" src="http://www.example.com/logo.png" />
    </div>

The code for Address is as follows:

    <div itemscope itemtype="http://schema.org/PostalAddress">
      <span itemprop="name">Google Inc.</span>
      P.O. Box: <span itemprop="postOfficeBoxNumber">1234</span>
      <span itemprop="addressLocality">Mountain View</span>,
      <span itemprop="addressRegion">CA</span>
      <span itemprop="postalCode">94043</span>
      <span itemprop="addressCountry">United States</span>
    </div>
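
The two snippets can also be combined, so that the address is tied to the same organization as the logo. The following is only a minimal sketch: the company name "Example Co." and the example.com URLs are placeholders, and the address values are reused from the snippet above. It relies on the schema.org `address` property of `Organization`, which accepts a nested `PostalAddress`.

```html
<div itemscope itemtype="http://schema.org/Organization">
  <!-- Brand name and home page URL (placeholder values) -->
  <a itemprop="url" href="http://www.example.com/">
    <span itemprop="name">Example Co.</span>
  </a>

  <!-- Logo image that should be associated with the brand -->
  <img itemprop="logo" src="http://www.example.com/logo.png" alt="Example Co. logo" />

  <!-- The address is nested inside the Organization as a PostalAddress -->
  <div itemprop="address" itemscope itemtype="http://schema.org/PostalAddress">
    P.O. Box: <span itemprop="postOfficeBoxNumber">1234</span>
    <span itemprop="addressLocality">Mountain View</span>,
    <span itemprop="addressRegion">CA</span>
    <span itemprop="postalCode">94043</span>
    <span itemprop="addressCountry">United States</span>
  </div>
</div>
```

Nesting the address this way keeps the name, logo and address in one block describing a single entity, rather than two unrelated pieces of markup on the page.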

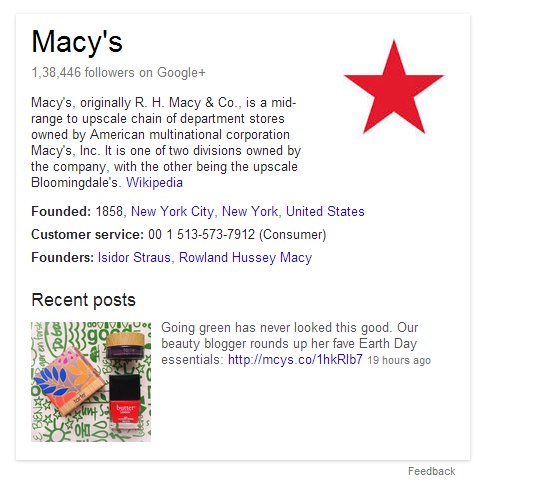

6. Knowledge Graph

With the Knowledge Graph, Google is trying to make search more intelligent. Results become more relevant because the search engine understands entities such as people, places and organizations, and the nuances in their meaning, in the same way the user does. This makes the search engine "think" more like the user. Search for your brand name and check whether a Knowledge Graph panel appears for it.

Knowledge Graph Example:

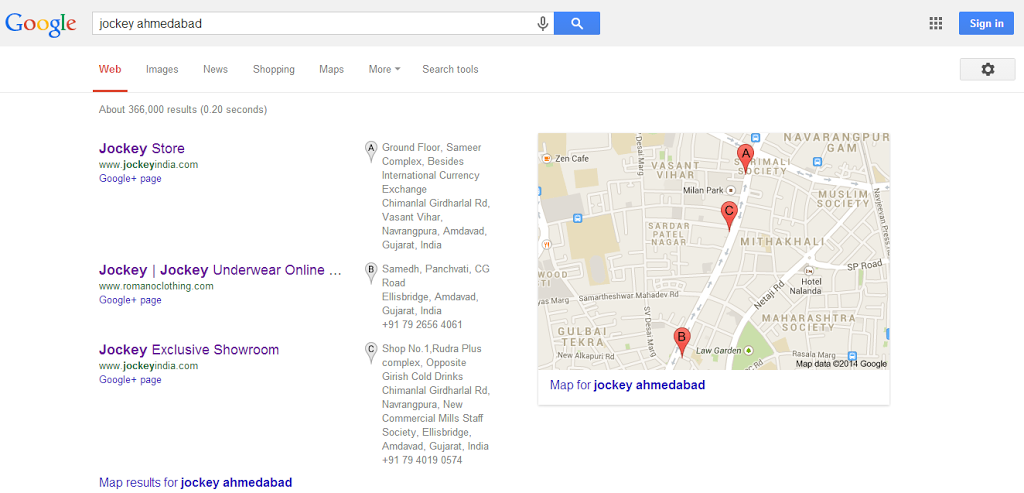

7. Local Search

Check whether you have a presence in local search for queries with local intent. Search for your brand name, or any targeted search query, together with the name of your local area, and see whether you appear in the local search results.

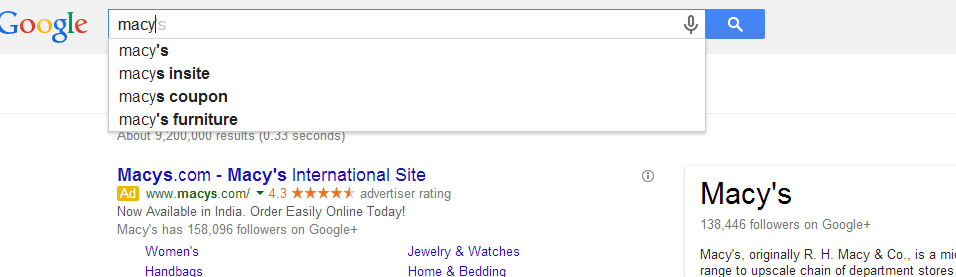

8. Google Suggest Auto Complete

Check what Google Autocomplete suggests for queries related to your brand. The suggestions reflect the reputation the brand is building, and the terms it is becoming associated with, over time on the web.

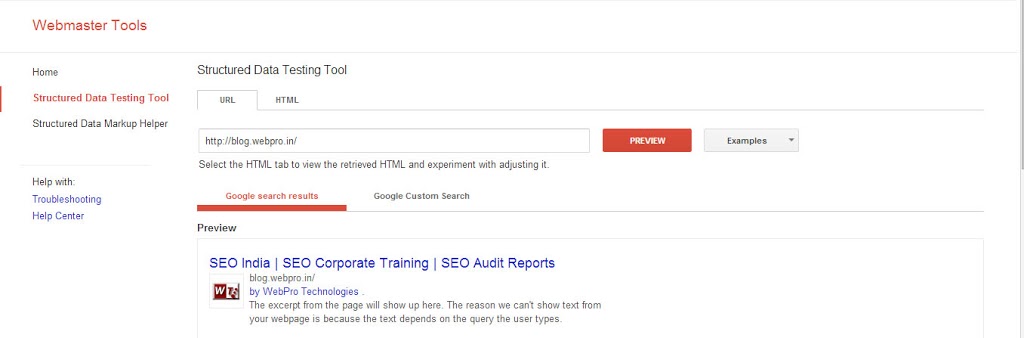

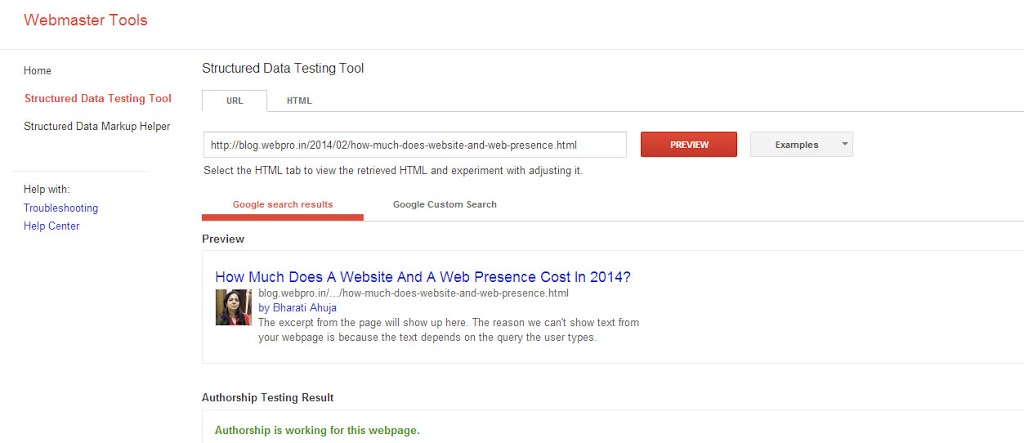

9. Publisher Markup

Publisher Markup should ideally be added to the home page of the blog and the Authorship Markup to the blog post pages. This gives due credit to the company and the authors of the blog.
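
As a rough illustration, the two kinds of markup are commonly implemented by linking to Google+. The sketch below assumes that approach. The Google+ URLs and the author name are hypothetical placeholders, to be replaced with the company's own page and each author's own profile.

```html
<!-- Home page of the blog: publisher markup, placed inside <head>.
     The Google+ page URL is a placeholder. -->
<link rel="publisher" href="https://plus.google.com/+ExampleCompany" />

<!-- Blog post page: authorship markup in the post byline.
     The profile URL and author name are placeholders. -->
<p>
  Written by
  <a rel="author" href="https://plus.google.com/+ExampleAuthor">Example Author</a>
</p>
```

The publisher link goes on the home page only, while the author link is repeated on every post and points to the profile of the person who wrote that post.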

Publisher markup associates the company logo with the blog, so the logo is displayed in the SERPs when the blog's home page is listed, while blog post pages show the author image when they are listed. Check this using the Rich Snippets Tool.

10. Social Media Account Page Designs

Check if the social media account pages convey the brand message and display the logo clearly.

Any genuine SEO will give priority to these aspects when working on the on-page, off-page and technical factors of SEO during an SEO campaign. As a website owner, your priority should be to check how your brand evolves on the web first, and then check the other metrics.
