What Is BERT?

Since its inception, Google has always tried to improve search for its users, both in the quality of the results and in how those results are displayed on the search engine results pages (SERPs).

Search results can only improve if the search engine correctly understands the query. Users often find it difficult to phrase a query that captures exactly what they need: they may spell words differently or may not know the right terms to search for. This makes it harder for the search engine to display relevant results.

Google says:

“At its core, Search is about understanding language. It’s our job to figure out what you’re searching for and surface helpful information from the web, no matter how you spell or combine the words in your query. While we’ve continued to improve our language understanding capabilities over the years, we sometimes still don’t quite get it right, particularly with complex or conversational queries. In fact, that’s one of the reasons why people often use “keyword-ese,” typing strings of words that they think we’ll understand, but aren’t actually how they’d naturally ask a question.”

In 2018, Google open-sourced a new technique for natural language processing (NLP) pre-training called Bidirectional Encoder Representations from Transformers, or BERT. With this release, anyone in the world can train their own state-of-the-art question answering system (or a variety of other models).

BERT considers the full context of a word by looking at the words that come before and after it. In search, this means trying to understand what the words in a query mean in relation to one another, rather than mapping the query onto pages word by word, before displaying the results.

This requires advances in hardware as well as software. For the first time, Google is using its latest Cloud TPUs to serve search results, so that it can return more relevant information quickly.

By implementing BERT, Google expects to better understand about one in ten English-language searches in the U.S., and it intends to bring BERT to more languages in the future. A key goal is to understand how prepositions such as “to” and other small words relate to the rest of the query, so that the search engine can establish the correct context and display relevant results.

Before rolling BERT out in search at a wide scale and for many languages, Google is testing how well it understands the intent behind the queries users enter.

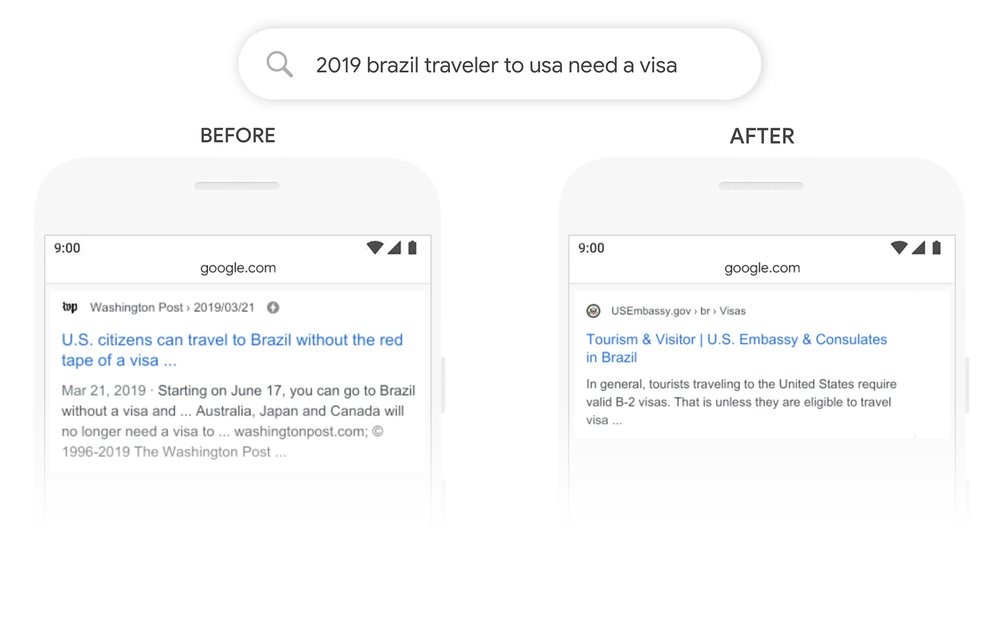

Google has shared some examples:

Here’s a search for “2019 brazil traveler to usa need a visa.” The word “to” and its relationship to the other words in the query are particularly important to understanding the meaning. It’s about a Brazilian traveling to the U.S., and not the other way around. Previously, our algorithms wouldn't understand the importance of this connection, and we returned results about U.S. citizens traveling to Brazil. With BERT, Search is able to grasp this nuance and know that the very common word “to” actually matters a lot here, and we can provide a much more relevant result for this query.
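To see why a small word like “to” matters, consider how a pure keyword approach represents a query. The short Python sketch below is a toy illustration under simplifying assumptions, not how Google or BERT actually works: it treats a query as a bag of words (word counts with the order thrown away) and compares that with a crude order-aware representation (each word paired with the word that follows it). The function names `bag_of_words` and `ordered_pairs` are hypothetical, chosen only for this example. BERT goes much further, reading every word in the context of all the words before and after it.

```python
from collections import Counter


def bag_of_words(query: str) -> Counter:
    """Keyword-style view (toy example): word counts only, word order discarded."""
    return Counter(query.lower().split())


def ordered_pairs(query: str) -> set:
    """Hypothetical order-aware view (toy example): each word paired with the next one."""
    words = query.lower().split()
    return set(zip(words, words[1:]))


q1 = "2019 brazil traveler to usa need a visa"
q2 = "2019 usa traveler to brazil need a visa"

# Same words, same counts, so a keyword-only view sees the two queries as identical.
print(bag_of_words(q1) == bag_of_words(q2))    # True

# Word order ("to usa" vs. "to brazil") is enough to tell them apart.
print(ordered_pairs(q1) == ordered_pairs(q2))  # False
```

In the bag-of-words view, the two queries are indistinguishable, so a matcher that relies only on keywords cannot tell which way the traveler is going. Even this crude word-pair feature separates them; BERT's contextual understanding captures relationships like this far more richly.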

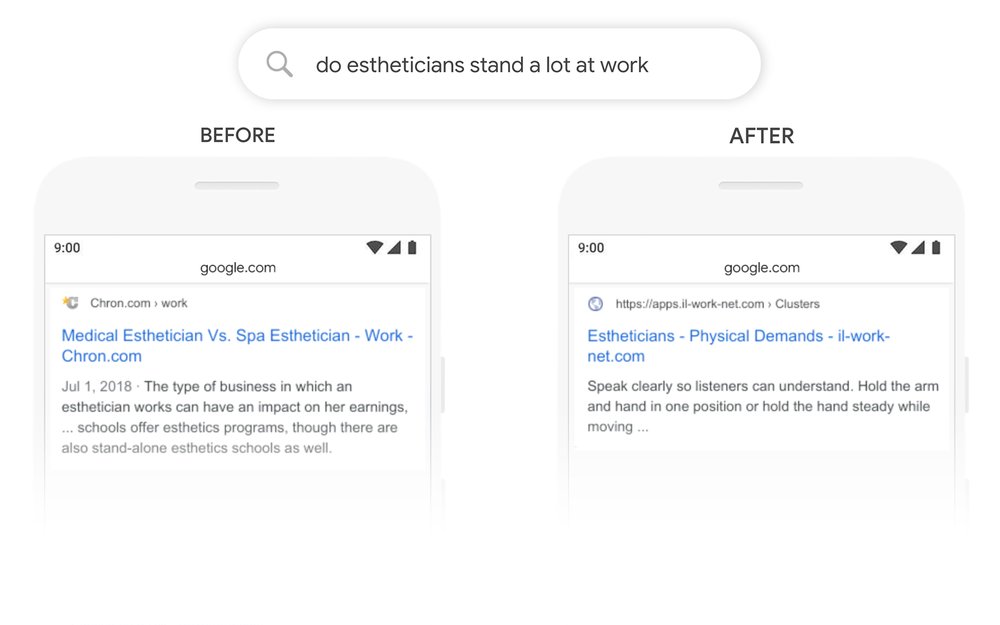

Let’s look at another query: “do estheticians stand a lot at work.” Previously, our systems were taking an approach of matching keywords, matching the term “stand-alone” in the result with the word “stand” in the query. But that isn’t the right use of the word “stand” in context. Our BERT models, on the other hand, understand that “stand” is related to the concept of the physical demands of a job, and displays a more useful response.

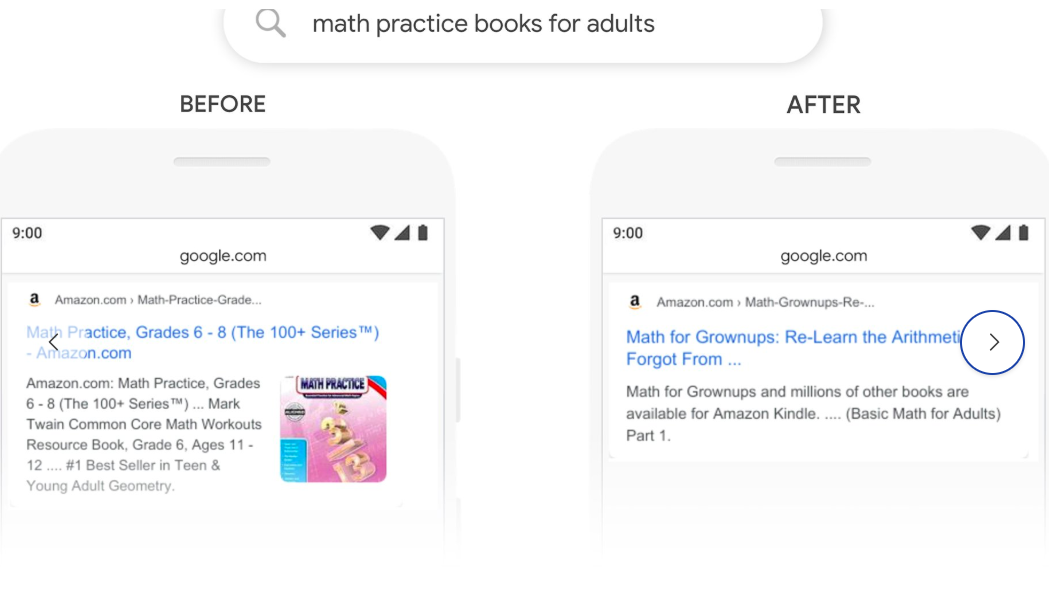

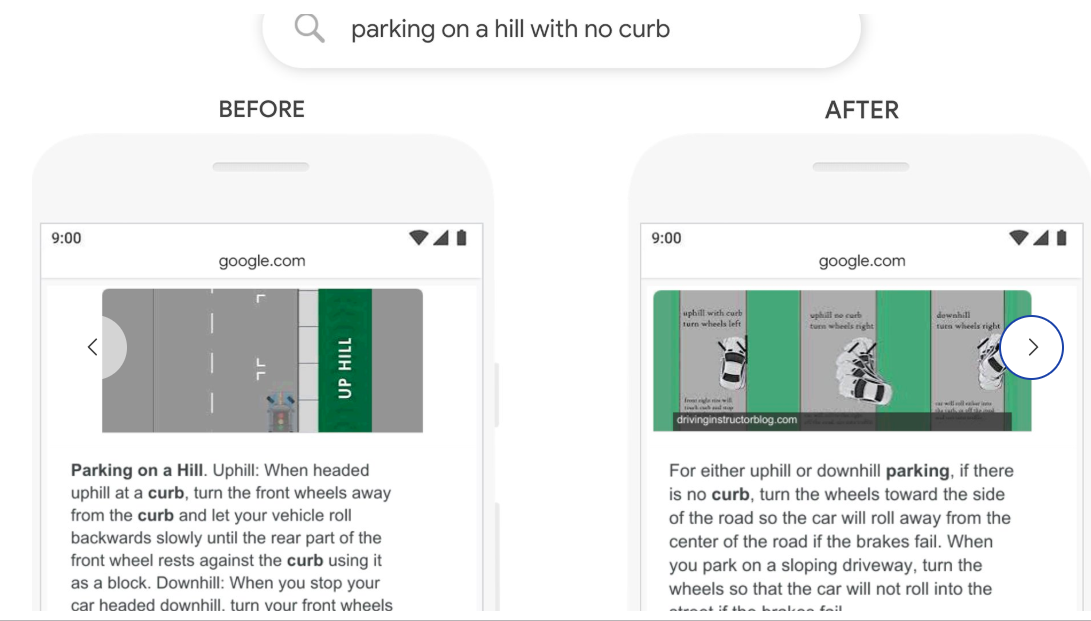

The screenshots above show more examples of nuances in language that search engines often fail to interpret correctly, which leads to less relevant results.

These before-and-after examples show how BERT improves the search results. Google is also applying BERT to featured snippets.

BERT and SEO: Do we need to optimize for BERT?

After reading this, I am sure SEOs are asking the most logical question: how do we optimize for BERT?

The plain and simple answer is that we do not have to optimize differently for BERT. We just need to keep adding informative, relevant content to build a more targeted and extensive search presence.

Here is what Google's own search representatives have to say:

There's nothing to optimize for with BERT, nor anything for anyone to be rethinking. The fundamentals of us seeking to reward great content remain unchanged.

— Danny Sullivan (@dannysullivan) October 28, 2019

In a recent hangout, John Mueller expanded on what Danny Sullivan had tweeted about BERT (see the tweet above).

Here was the question posed to John Mueller:

Will you tell me about the Google BERT Update? Which types of work can I do on SEO according to the BERT algorithms?

John Mueller’s explanation of the purpose of the BERT algorithm:

I would primarily recommend taking a look at the blog post that we did around this particular change. In particular, what we’re trying to do with these changes is to better understand text. Which on the one hand means better understanding the questions or the queries that people send us. And on the other hand better understanding the text on a page. The queries are not really something that you can influence that much as an SEO.

The text on the page is something that you can influence. Our recommendation there is essentially to write naturally. So it seems kind of obvious but a lot of these algorithms try to understand natural text and they try to better understand like what topics is this page about. What special attributes do we need to watch out for and that would allow us to better match the query that someone is asking us with your specific page. So, if anything, there’s anything that you can do to kind of optimize for BERT, it’s essentially to make sure that your pages have natural text on them…

“..and that they’re not written in a way that…”

“Kind of like a normal human would be able to understand. So instead of stuffing keywords as much as possible, kind of write naturally.”

As website owners and SEOs, we have to understand that Google is constantly working to improve search and the search experience for its users. BERT is one step in that direction.

It is not an algorithm update that directly affects any on-page, off-page, or technical factors. BERT simply aims to understand search queries more accurately and relate them to the right content.

According to Google, language understanding remains an ongoing challenge: no matter how hard it works at understanding search queries better, it keeps encountering surprises from time to time that push it out of its comfort zone again.

Because BERT works by understanding search queries better in order to return more relevant results, its implementation does not directly influence SEO factors. What matters is quality content, added to the site regularly to keep it relevant and matched to a growing range of search queries.

January 20, 2020