I am sure all of us in the web arena have commented on blogs, written blog posts, or shared content and had conversations on social media sites, at either a personal or a professional level. Lately I have been in a retro mood, ever since I started working on server logs for SEO analysis.

I think it is a good thing to rewind the tape of life sometimes, gain some insight into our past actions and clear our mind to plan for the future. So I decided to focus on the footprints WebPro Technologies has left through blog commenting in the past. This is not a client report, so there are no metrics to monitor; it is an honest self-analysis of what we discussed and said on other blogs and social media sites, and how far we ourselves have adhered to it. It also gives us a chance to compare our past views with the present, changing scenario.

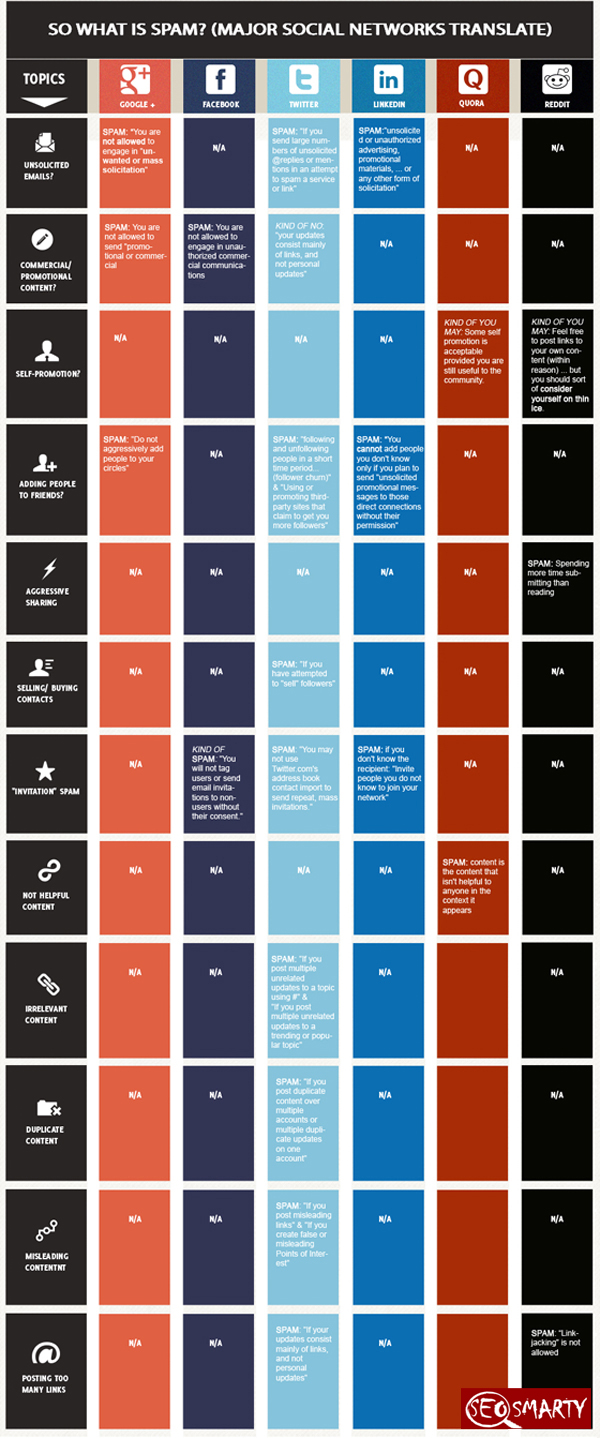

Since the Panda and Penguin updates there have been umpteen posts on natural links and link pruning, but we have always been of the opinion that the term “Link Building” itself is wrong: you do not build natural links; they get built as a result of the quality footprints you leave on the sands of the web during your web journey.

One way to judge the knowledge and ideology of an SEO company is to read what it has written in the past, in comments, blog posts, social media conversations, reviews and forum discussions on various topics and issues, and see whether it is still consistent in what it says and how those past opinions have played out in the real world today.

After all, the words written on the canvas of the web on various platforms are not mere words but content in different forms, around which the whole web world revolves. Authentic, valuable content should stand the test of time and add to the author's authority. I have been commenting on and discussing many topics related to the search industry, and have also shared links to posts written by me where there was a strong correlation with the blog topic. I never bothered whether the comment links carried a 'nofollow' attribute or how many thumbs down I got, as my main purpose was to put forward an opinion that I strongly believed in or felt about.

The content a person has posted in the past can say a lot about that person's knowledge, beliefs and long-term goals. It reflects how solid their viewpoints are, and the present scenario can tell us whether they have stood by what they said and whether there is coherence between what they say and what they do. This kind of check can also make people think before they post, and it becomes a self-check that helps ensure quality content is published on the web.

Our business associate @wasimalrayes suggested turning this into a comment archive that can be added to the site and updated with every comment we make on the web. I think it is a good idea: it not only adds your web voice to your site but also acts as a self-check tool for adding content responsibly to the WWW.

We all have a blog archive, so why not a comment archive too? After all, comments are also mini blog posts that we publish on the web, reflecting our opinion and perspective on the topic at hand.

What do you think about adding a ‘Comment Archive’ section to the site?

We would like to share some of the past comments, blog posts and social media conversations we have had on the web on various topics. Since the blogosphere is brimming with posts about the Penguin and Panda updates, I’ll start with link building:

Topic 1 Link Building:

Some Blog Comments Made By Us In The Past Reflecting Our Views On Link Building:

Our Comment:

WebProTechnologies | January 3rd, 2012

All the predictions for this year are spot on. I agree with all the points predicted.

Regarding #3, I think Google might just surprise us this year by giving less importance to inbound links. Only links from trusted, high-authority sites and editorial links will matter and be taken into account. The major focus will be on social media signals, which will reflect trust and authority. Hence the context in which links are shared on social media, and the discussions and reactions surrounding them, will make a big impact.

So stop the link building nuisance, focus on building quality content in all forms (text, images, video, audio, etc.), share it on social media, and let the natural links get built...

Our Comment:

February 20, 2010 at 10:24 am

Totally agree.

In my opinion, everybody has gone too far in thinking only about how to get more and more links. I am sure that when the PageRank concept was framed, the main purpose was to judge the true goodwill and popularity of a website in proportion to the number of inbound links it has.

But with all these ethical and unethical methods of gaining more and more links, the whole purpose is defeated.

If a site has good, informative content and ranks high in the search engines through ethical SEO practices, it automatically gets a lot of links from various sources.

The main purpose of a genuine searcher is to find what is available globally and locally, and once a searcher finds it, they will surely save it and link to it in various ways.

Instead, all energy and effort should go into improving the quality of the website in various ways, for example by adding more varied content.

Don’t run after links. Let them come to your website genuinely.

Our Comment:

WebProTechnologies | May 21st, 2010

It is aptly put at the very beginning of this post that link building is a task detested by all.

I am of the opinion that the term 'link building' itself is incorrect. Links do not have to be built; they should get built naturally in the process as your website gains a wider web presence and preference.

Every link is like a vote for your site and for the goodwill of your company, and it has to be earned as part of the website's web journey.

If we focus on quality content, a good internal linking architecture, and a site that is both visitor-friendly and robot-friendly, then getting high SERP positions is not a difficult task.

Once you have high SERP positions, trust me, there will be loads of directories and portals adding your site to their listings without you even knowing about it, as they too are looking for quality listings.

Once upon a time a DMOZ listing was something you always hoped for after submitting your site, as it was surely a valuable link. I don't know if it still has that importance, but I still manually submit each site to DMOZ.

Apart from having a site of good quality in all respects, other genuine methods of gaining natural inbound links as your website goes from one milestone to another are:

- Focus all your efforts on making the site informative, high-quality and content-rich so that it gets links automatically.
- Do not neglect the basics of on-page optimization and just chase links. (Very important from the SEO perspective.)
- Participate in social media networks for discussions and information sharing, and link to the relevant pages of your website. (It need not always be the home page.)
- Add social bookmarking buttons to your website.
- Make RSS feeds available on your website.
- Issue press releases periodically.

Our Comment:

WebProTechnologies | May 31st, 2011

Well, despite all the thumbs down, my opinion remains the same. Quality content on your website and a quality web presence across all the search options, blogs, discussions, social media, etc. will always be increasingly rewarded by any search engine in the long run, and will result in targeted inbound traffic.

We do a fairly good job on SEO and rankings without focusing on link building. Instead, we educate and train our clients to maintain their blogs and social media accounts effectively, and in the bargain they end up getting quality links. It has worked for us.
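The idea running through these comments, that every link is like a vote and that PageRank was meant to measure a site's goodwill through its inbound links, can be illustrated with a minimal sketch of the classic textbook PageRank power iteration. This is a simplification under stated assumptions: the four-page link graph is hypothetical, the damping factor of 0.85 is the commonly cited value from the original PageRank paper, and nothing here claims to reflect how Google ranks pages today.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Simplified textbook PageRank computed by power iteration.

    `links` maps each page to the pages it links out to; every link
    target must also be a key. Pages with no outlinks ("dangling" pages)
    spread their rank evenly over all pages.
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page receives the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                # Each outlink passes an equal share of this page's rank: its "vote".
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Dangling page: distribute its rank evenly across all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical link graph: every other page links to "home",
    # while nothing links to "orphan".
    graph = {
        "home": ["blog"],
        "blog": ["home", "services"],
        "services": ["home"],
        "orphan": ["home"],
    }
    for page, score in sorted(pagerank(graph).items(), key=lambda kv: -kv[1]):
        print(f"{page:10s} {score:.3f}")
```

In this toy graph the page that collects the most votes ("home") ends up with the highest score, while "orphan", which links out but earns no inbound links, keeps only the baseline random-jump share, however many links it gives away.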

Our Archived Blog Posts On Link Building:

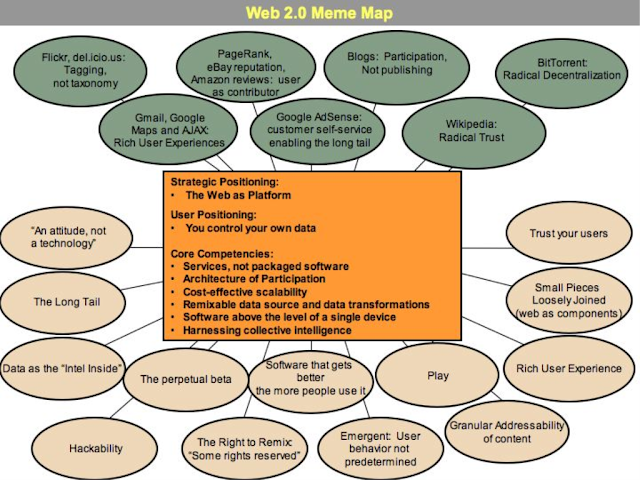

Topic 2 Social And Search Integration:

Blog Article / Social Media Post

Our Comment:

Yes, initially AltaVista was THE SEARCH ENGINE, and keyword spam was something Google had to work on to improve the quality of search results, for which it came up with the PageRank technology to add value and quality to search results.

But every coin has two sides: this innovation also gave birth to link building spam, and despite the improvement in search results that established Google as the topmost search engine, it polluted the web with unnecessary content clutter.

As people kept flocking to social media sites, the search engines thought of using public opinion and word of mouth as criteria for quality. How well the search engines will integrate social media signals, only time will tell.

But it is certain that this will ensure more genuineness, as you cannot manipulate public opinion. SEO is what you say about your company; social media is what others say about your company. When both these messages are in sync, credibility is established. Hence authority, credibility, word of mouth (WOM) and an overall presence are the demands of the day for true SEO, and in the long run they will ensure natural, quality inbound links on their own.

So first work on content, establish an identity, authority and online credibility, and then the links will follow. If you think about it, that was the main goal of the PageRank technology: to check how many people vouch for a certain page's content. Link spam negated that. Now, with social media signals and the Panda Update's focus on quality content, this will surely be taken care of to a great extent.

The best way to achieve a great online presence is to have an equally great offline, real-time business presence: http://blog.webpro.in/2011/10/best-way-to-assure-quality-content-is.html

I will not be surprised if, in the coming year, the blogosphere gets bombarded with blog post meteors on "THE DEATH OF THE SPAMMY LINK BUILDING INDUSTRY" instead of SEO being dead.

Blog Article / Social Media Post

Our Comment:

Bharati Ahuja 11th January 2012

I think the blending of social results into search is not only the inevitable evolution of search but also a reflection of what took place as civilizations evolved. We can say that the stone age of search is over: search now has the ability to reflect what people in your community are talking about and recommending. It is basic human nature to look for something we want, ask peers for their opinions, and then take a decision. We have been doing this for ages; now we just have to adapt to doing it in the virtual world.

To a certain extent I believe that if Google wants to improve the quality of search results and combat spam on the web, then yes, it is essential for the search engine to access data from a resource it has full control over. But from the search engine perspective, only time will tell how well Google succeeds in integrating social signals from other social media sites across the web; otherwise, with the kind of hold Google has over the search market, it is going to be Google, Google all the way…

But it's surely not the end of SEO. In fact, all these changes are taking SEO to a more qualitative level.

Archived Blog Posts On Our Blog:

http://blog.webpro.in/2011/02/integration-of-social-and-search.html

http://blog.webpro.in/2010/06/search-seo-and-social-media-integration.html

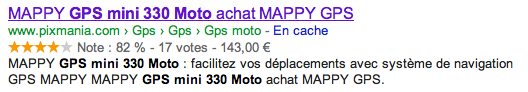

Topic 3 “Not Provided” Keyword Data:

Our Guest Post On The Topic:

Archived Blog Posts On Our Blog:

http://blog.webpro.in/2011/11/search-queries-googles-encrypted-not.html

Blog Article / Social Media Post

Bharati Ahuja Jan 9, 2012

I think this piece is a great summary of how Google has been offering support to SEOs right from the start, but it can do much more, as it now has all the data, in fact including the data about social signals. Awareness of SEO has also grown over time, and if Google at this stage continues to share more and more information, it will become increasingly difficult for Google to maintain and improve the quality of search results. We saw that by 2010 content and link spam had grown to such an extent that Google had to come up with the Panda Update.

IMHO, especially with regard to keyword referrer data:

2011 was a year of changes, and I think it is a period of transition to a better web and better search results, as SEO goes far beyond keywords and rankings.

When businesses lack complete keyword data, the focus shifts to the search queries in WMT (Google Webmaster Tools) that have a good CTR, which is a true measure of quality over quantity.

This restriction makes website owners think from a larger perspective and focus on the correlation between content and keywords rather than on rankings. It will take SEO campaigns beyond the metrics of keywords and rankings, and the focus will be on other quality metrics like CTR, conversions, bounce rate, etc. This will improve the quality of the web overall, as websites, besides being rich in content, will have to focus on good landing pages, a proper call to action, page load speed and good navigation, which will ensure a better UX.

This lack of data will draw a clear line between a PPC campaign and an SEO campaign. The quality metrics will be conversion rate (CR) and CTR, which again will make the client focus on content and landing page design. That is another quality step towards a better web: rather than discussing keywords, the client will be open to discussing content and design.

I have also shared my views at http://blog.webpro.in/2011/11/search-queries-googles-encrypted-not.html
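The comment above suggests judging search queries in WMT by CTR rather than by raw keyword volume. As a rough illustration, the sketch below computes CTR as clicks divided by impressions and ranks queries by it. The query strings and numbers are made-up sample data, and the `min_impressions` cutoff is a hypothetical parameter added so that queries with only a handful of impressions do not dominate the list; it is not a WMT setting.

```python
from dataclasses import dataclass


@dataclass
class QueryStats:
    query: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks divided by impressions (0.0 if no impressions)."""
        return self.clicks / self.impressions if self.impressions else 0.0


def top_queries_by_ctr(rows: list[QueryStats],
                       min_impressions: int = 100) -> list[QueryStats]:
    """Keep queries with enough impressions and sort them by CTR, highest first."""
    eligible = [r for r in rows if r.impressions >= min_impressions]
    return sorted(eligible, key=lambda r: r.ctr, reverse=True)


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    sample = [
        QueryStats("seo company", impressions=5000, clicks=50),
        QueryStats("seo consultant", impressions=800, clicks=64),
        QueryStats("link pruning", impressions=40, clicks=10),  # below the cutoff
    ]
    for row in top_queries_by_ctr(sample):
        print(f"{row.query:20s} {row.ctr:.1%}")
```

Here the query with far fewer impressions but a much higher share of clicks ranks first, which is the "quality over quantity" reading of CTR described in the comment.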
