Introduction

In the dynamic world of SEO, content creation stands as the cornerstone of any successful strategy. Crafting engaging, informative content, however, is no small feat. From selecting the right topic to refining language and adapting grammar for specific audiences, the content creation process involves numerous intricate steps.

This process is both challenging and time-consuming. It requires extensive research, reliable statistics, and a deep understanding of the target audience.

The Challenge of Content Creation

Creating compelling content involves several key elements: topic selection, drafting, organization under subheadings, proofreading, and tailoring language to specific regions (for example, UK versus US English).

Each of these steps demands careful attention and considerable time. Staying current with research, statistics, and the opinions of industry thought leaders adds another layer of complexity.

The Role of AI in Content Creation

Enter Artificial Intelligence (AI), a transformative force that has reshaped many industries, including content creation. One noteworthy AI tool in this domain is ChatGPT. Developed by OpenAI, ChatGPT is a chatbot built on the company's Generative Pre-trained Transformer (GPT) models and tuned to produce human-like text in conversation.

Unveiling ChatGPT

Understanding the Model

The acronym GPT in "ChatGPT" stands for "Generative Pre-trained Transformer." Trained on a large and diverse body of text, much of it drawn from the internet, this AI language model generates responses based on patterns learned during training. The "Chat" in ChatGPT signifies that it is designed for conversational use.

Capabilities of ChatGPT

ChatGPT serves as a versatile tool for content creators. It can quickly draft answers to questions that might otherwise take hours of internet research, although those answers still need to be checked.

Additionally, the tool can check grammar, adapt language to either UK or US English, translate content into various languages, and even suggest topics for future blog posts.

These capabilities make ChatGPT an invaluable assistant, enhancing productivity and efficiency in the content creation process.

The Impact on Productivity

Utilizing ChatGPT or other AI tools presents a significant opportunity for content writers to boost productivity.

By automating certain aspects of the content creation workflow, writers can allocate more time to creative thinking and refining the quality of their output. The efficiency gains provided by AI tools can lead to a more streamlined and effective content creation process.

Overcoming Writer's Block

One of the most substantial advantages of integrating ChatGPT into the content creation process is its ability to help writers overcome the dreaded writer's block.

When stuck, writers can talk an idea through with ChatGPT, asking it for prompts, suggestions, or alternative angles to get their creativity flowing again.

Responsible Use of ChatGPT

While ChatGPT offers remarkable assistance in content creation, it is imperative to use it responsibly and understand its limitations.

It should be treated as a powerful complement to human creativity and expertise, not a complete replacement for them.

Regular review, fact-checking, and human intervention remain essential to ensure the production of high-quality, accurate content.

The Limitations of ChatGPT

Inability to Draw on Personal Experience

One notable limitation of ChatGPT is that it has no personal experiences to draw on. Lacking firsthand knowledge, genuine opinions, or unique insights, the model relies solely on patterns learned from its training data.

Content creators should be mindful of this constraint and not expect the model to provide insights based on lived experiences.

Behind the Scenes: How ChatGPT Generates Answers

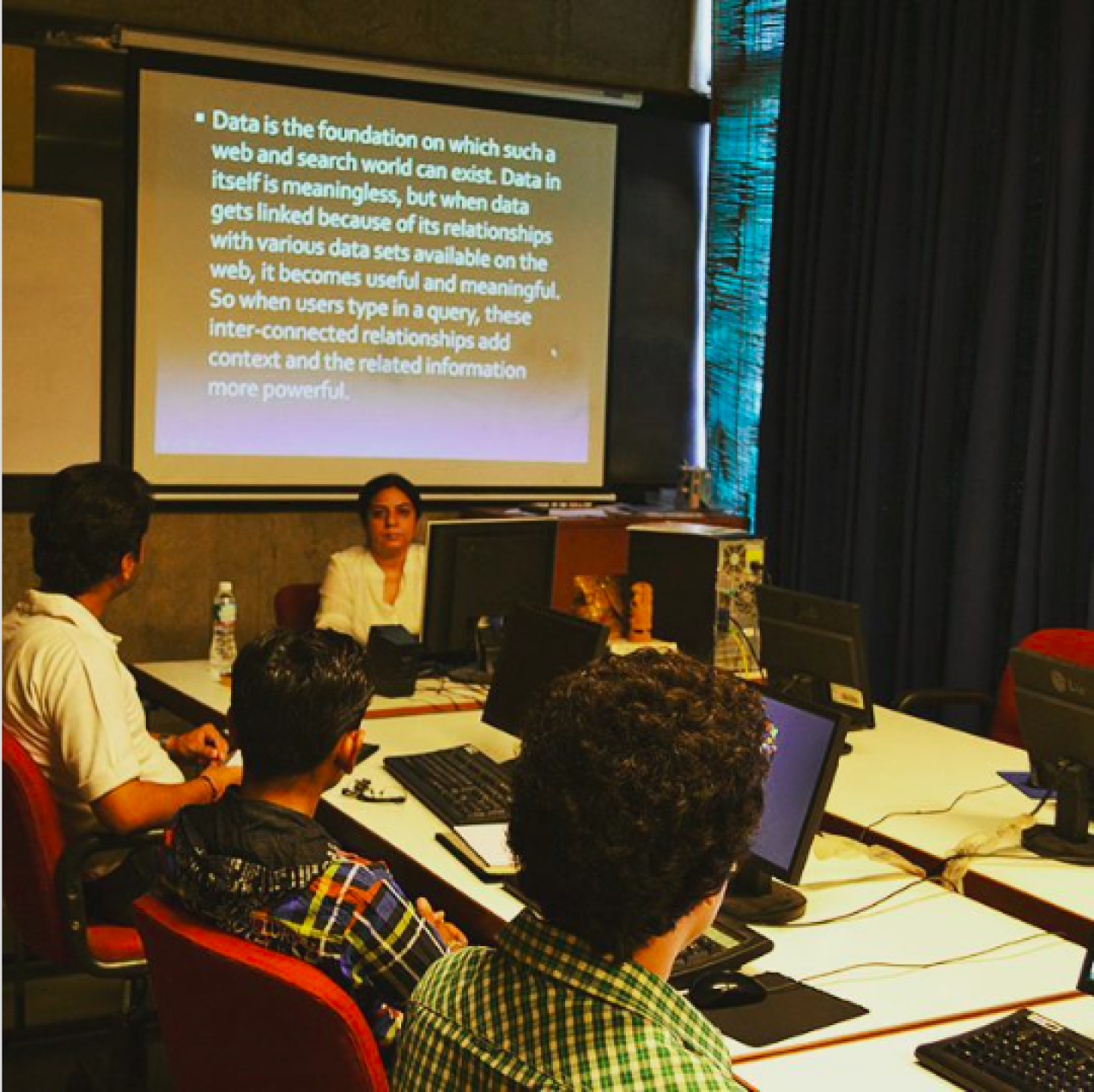

ChatGPT derives its answers from patterns learned during training on a large, diverse dataset drawn largely from the internet. The model, based on the Generative Pre-trained Transformer architecture, is trained to predict the next word (more precisely, the next token, which may be a whole word or part of one) given the preceding context. This training enables the model to capture grammar, semantics, and some aspects of reasoning.

Unlike a search engine, the underlying model does not look answers up on demand. Instead, it relies on the knowledge encoded in its parameters during training (some versions of ChatGPT add web browsing on top, but the model itself does not browse). While it can provide information on a wide array of topics, users must be cautious, as the model may not always have the most up-to-date or accurate information. Independently verifying critical information remains a best practice.
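To make next-word prediction concrete, here is a deliberately tiny sketch in Python. It is a toy bigram model, not a transformer: it counts which word follows which in a few training sentences, then always picks the most frequent follower. Real GPT models use large neural networks over subword tokens and usually sample from a probability distribution rather than always taking the top choice. The function names (`train_bigram`, `predict_next`, `generate`) and the three-sentence corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Toy 'training': count which word follows each word in the corpus.

    Hypothetical bigram counter for illustration only; this is not how
    GPT models are actually trained.
    """
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        # The toy model only "knows" what appeared in its training text.
        return None
    return followers.most_common(1)[0][0]


def generate(model: dict[str, Counter], start: str, max_words: int = 8) -> str:
    """Greedily extend `start` one predicted word at a time."""
    words = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(model, words[-1])
        if nxt is None:
            break  # no known continuation, so stop generating
        words.append(nxt)
    return " ".join(words)


# Hypothetical training text.
corpus = [
    "content creation takes time",
    "content creation takes research",
    "good content takes time",
]
model = train_bigram(corpus)

print(generate(model, "content"))      # content creation takes time
print(predict_next(model, "ChatGPT"))  # None
```

Asking the toy model about "ChatGPT" returns None because that word never appeared in its training text. This is a greatly exaggerated miniature of the limitation described above: a model can only draw on what it learned during training, which is why independent verification still matters.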

Conclusion

In content creation, using AI tools such as ChatGPT is a smart move. The efficiency gains, creative assistance, and help with overcoming writer's block make AI a valuable asset for content writers.

However, the responsible use of such tools is paramount, and content creators should continue to exercise human judgment, ensuring that the content generated aligns with their standards of accuracy, relevance, and quality.

As AI becomes more sophisticated, it is likely not only to assist with mundane tasks but also to act as a catalyst for creativity. In future content creation workflows, AI may serve as a source of inspiration and ideation, and even challenge creators to push the boundaries of conventional storytelling.

In essence, the ongoing evolution of AI promises a paradigm shift in how we conceive, produce, and consume information. Content creators who embrace this wave of technological advancement will find themselves at the forefront of a new era in digital communication, one in which collaboration between human ingenuity and artificial intelligence pushes creativity further than either could alone.

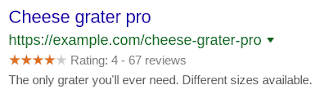

Google posted on its webmaster blog today that it has updated the rules for how and when it shows review rich results. Search results enhanced with review rich results (the scores and/or “stars” you sometimes see alongside search results) can be extremely helpful when searching for products or services.

Google said that, to make review rich results more helpful and meaningful, it is introducing algorithmic updates to how reviews appear in rich results.

The main takeaway is that if a site publishes reviews about itself in a way it controls, so that the reviews can be moderated or edited, those reviews will not be shown as rich results. This applies even to reviews posted through third-party widgets.